ChatGPT: Can teachers tell when students cheat using AI?

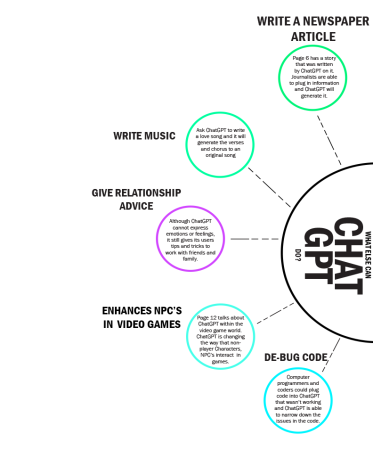

Ask the emerging artificial intelligence machine ChatGPT (Chat Generative Pre-trained Transformer) to formulate an essay, personal narrative, or lab report, and within seconds a document will be produced. This poses a potential threat to the academic integrity of students who may use this AI program to cheat.

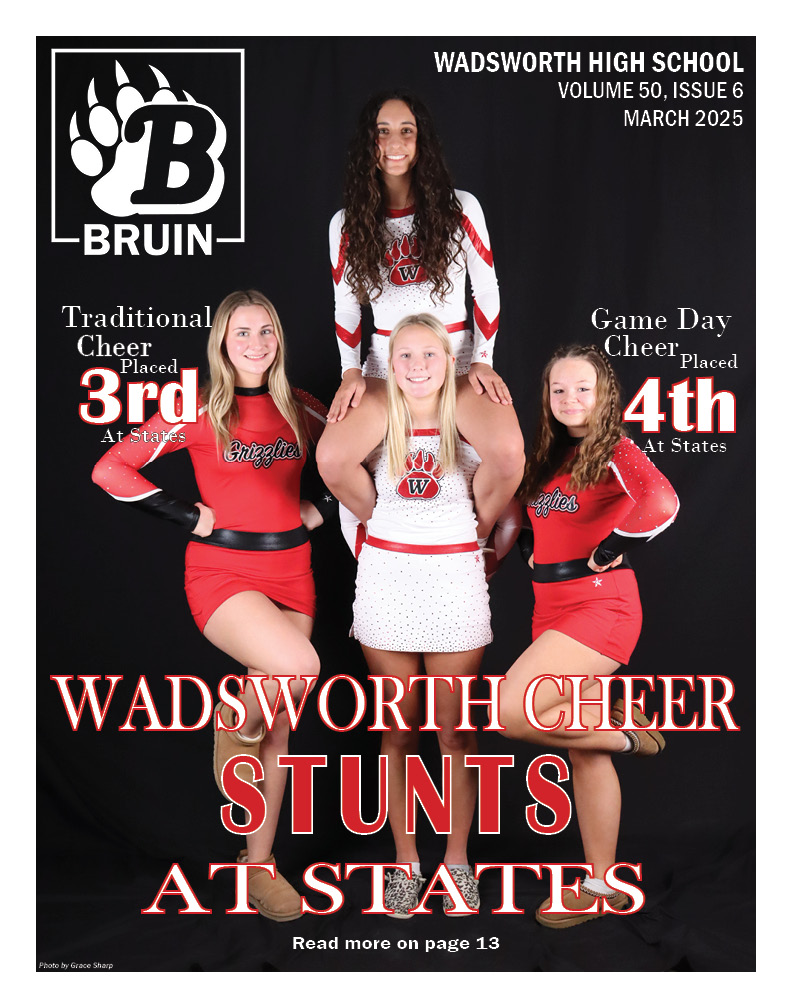

OpenAI, the company behind ChatGPT, is a research organization that specializes in artificial intelligence. Its mission statement is “to ensure that artificial general intelligence benefits all of humanity.” The Bruin devised an experiment to test how much ChatGPT can influence academic integrity and whether teachers could tell the difference between a paper written by a student and a paper written by AI.

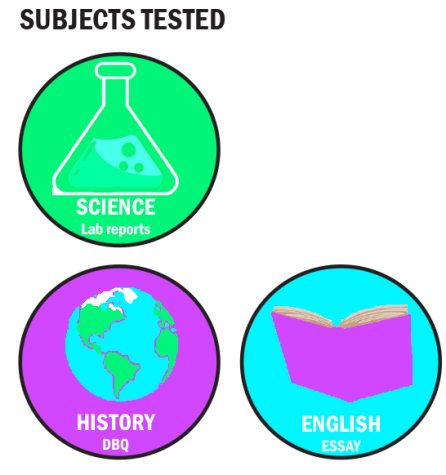

To conduct this experiment, the Bruin picked one teacher each from the English, history, and science departments at Wadsworth High School. The teachers chosen were Mrs. Laura Harig from the English department, Mr. Jason Knapp from the history department, and Mr. Aaron Austin from the science department.

All three teachers had upcoming papers due in their classes. As each assignment came due, the Bruin collected the papers instead of the teachers. Each teacher was then given the papers back, but with a catch: hidden among the assignments were a number of ChatGPT-generated papers. The Bruin held onto the real papers while the teachers decided which papers they believed to be AI-generated.

Harig was given three essays that were written by ChatGPT for her 9th grade Honors English class. Harig was able to correctly select the ChatGPT papers, and she mentioned various tip-offs that alerted her to a potentially fake paper.

“It was very formulaic, where it was all the same, so that kind of clued me in,” Harig said.

Once ChatGPT produces a paper, the user can alter the essay to their liking. For the purposes of this study, the Bruin did not change the contents of the essays that ChatGPT produced, and Harig noticed certain repetitions in the papers that hinted at AI writing.

“They were very similar,” Harig said. “There were some phrases that were used in all three of the papers that didn’t really sound like the student, but the fact that they all showed up [alerted] me.”

Learning to express a unique authorial voice and tone is something students are encouraged to do in any English class. Knowing the audience and controlling diction both change how a paper is written. For example, the word choice a student would use for a high school paper is not the same word choice a student would use for a college-level paper.

“I honestly believe that I picked up on it because it’s February and I’ve been reading their stuff all year, but if they had started – not that I’m giving them points on how to use it – but if they had started using that and then used it all year, then I don’t know that I would have noticed,” Harig said.

ChatGPT launched in November 2022 and first made headlines in January 2023. Although ChatGPT has only recently become popular, the 2022-2023 school year is already halfway over, and teachers have already become familiar with their students’ writing styles.

“I think for students it’s a tool to make life easier, but for teachers, I think it is kind of a threat to academic integrity because they’re not writing their own papers,” Harig said. “I think that there’s an important mental and critical thinking and problem solving that’s necessary for writing and learning how to write. If we give into this, then students aren’t learning that basic process.”

One of the major concerns that people have with ChatGPT and other AI programs is that AI learns from itself. If teachers are beginning to pick up on what AI papers look like, then the AI will adapt itself to fit what the user is asking of it.

All students need to do is ask ChatGPT to write a paper at a specific level. Harig’s students, for example, would only need to ask ChatGPT to write a paper at a 9th-grade level and then give it the prompt and any other information they are required to include in their paper.

“I do think that the more [ChatGPT] grows, I think it will catch on and I think the fact that we can’t really keep up with how it improves, I think eventually, people will start using it,” Harig said.

In the case of the science department, Austin was given one ChatGPT-generated lab report. The lab reports were pulled from Austin’s AP Physics class, which consists of upperclassmen.

The lab was a test with an egg and a rubber band. The Bruin asked ChatGPT to generate a lab report for the experiment, which proved difficult because of the number of equations and specific information that had to be entered into the AI program. Austin correctly identified the ChatGPT-generated lab report but said it was a “tough call.”

“Because I’ve had them [students in class] for a year, I kind of know some of their different writing styles because they’ve had to do this multiple times. That helps in knowing how they are going to write stuff. But if they used that [ChatGPT] the whole time, then that would never work,” Austin said.

Austin had some difficulty telling the difference between the real and generated lab reports because the reports were written in lab groups. With multiple people writing each report, the writing styles varied.

“I wrote on the lab report ‘it seems to be changing here’, and I was like, is that the program screwing up? Because I knew I had some fake ones or is that just different writing styles? That made it hard for me to tell which ones they were,” Austin said.

Austin’s findings again showed that students need to have a basic understanding of what they are learning in order to plug the information into the ChatGPT program. This raises a question: if students need to understand the content to cheat, why not just use their knowledge and write the paper themselves instead of having an AI program write it for them?

Knapp teaches AP United States History, and in preparation for the end-of-year AP test, his students write practice essays. For this experiment, the Bruin worked with the AI program to create a DBQ, or document-based question. To write a DBQ, students are given a prompt and seven documents that they must use to support their thesis on the prompt.

ChatGPT told the Bruin that it was familiar with writing DBQs and took the documents and analyzed them. Knapp has his students highlight their DBQs by section: one color for the thesis, another for quotes and quote explanations, and so on. A Bruin staff member who had taken Knapp’s class last year went through and highlighted the ChatGPT-generated copy so that Knapp would not be able to spot it simply because it was not highlighted.

Knapp incorrectly chose which paper was generated by ChatGPT.

“There were a couple other [essays] that might have been close, but I was pretty confident. With the way it just ended abruptly I’m like ‘okay, this is a computer,’” Knapp said.

Knapp took a different approach when determining which student’s paper he believed was fake. Instead of trying to find an essay that was too well written to be believable, he was looking for one that mimicked the errors and style of an average student.

“I was looking for tenth-grade writing,” Knapp said. “And I could be going about it the wrong way. But maybe it is modeling tenth-grade writing, which makes it difficult to find.”

The nature of the DBQ made it challenging for Knapp to pick out an essay that didn’t sound right. The essay has a very specific rubric that explains what evidence must be used and how it should be incorporated. Due to this, the unique voices of students are unable to come through in their writing.

“It’s hard to find in this essay because the DBQ is so objective,” Knapp said. “You’re leaving all that subjectivity out. You’ve got the evidence, and that’s what you have to use.”

The one place AI struggles is in mimicking the “humanness” of a real person’s writing.

“Would AI ever write a history book? I don’t know,” Knapp said. “I’m sure it could do all the research online, but I’m just not sure that it could put together that great story that a skilled historian becomes really good at.”

ChatGPT poses a threat at levels outside of high school as well. Some professors at the collegiate level are worried about the effects that AI programs will have on college-level students.

Brianne Pernod teaches College Credit Plus (CCP) classes for the University of Akron at Wadsworth High School. Pernod teaches Comp 1 and Comp 2 to sophomores, juniors, and seniors.

“I think it’s important that [educators] figure out a way to work with the technology and also have conversations with the students about the appropriateness, the use of it, the necessity, that kind of stuff,” Pernod said. “It’s not going away, so we definitely have to find a way to work with it.”

The University of Akron has changed its plagiarism policy in order to combat AI plagiarism. The use of any AI program, including ChatGPT, can carry many consequences and can even lead to failure of the course.

“[Professors need to] have open conversations with your college kids about why it’s so important to have academic integrity, because of the expectations for the college kids,” Pernod said.

Pernod said she hopes students would not use ChatGPT in any college-level class because, while high school classes are required, college is not, and students are there by choice.

“I think right now it’s a threat because it’s such a new technology that we don’t necessarily know how to utilize it in the classroom yet, but I think we would be naive to think that returning to paper-pencil is ever going to happen,” Pernod said. “I think that it [AI] is good, it has the potential for good, but as with anything, in the wrong hands, or used nefariously, it is harmful.”

AI will continue to grow and learn from itself as time goes on, and as the concern for cheating grows, technology companies are attempting to devise programs to prevent or weed out this threat in an academic setting.

Competing AI programs are already being created in response to ChatGPT. Google has announced that it will soon launch an AI chatbot named Bard.

There are even some AI programs that teachers can run on students’ papers in order to tell if the paper is truly written by the students or if it is written by AI.

“We’ve trained a classifier to distinguish between text written by a human and text written by AIs from a variety of providers,” says OpenAI’s ChatGPT classifier website.

While the classifier is still in the early stages of development with constant updates, it demonstrates that companies such as OpenAI see the potential threat posed by artificially generated text and are working to counter the problem before it grows out of control.


Haley has been on the staff for all four years of high school. She started out as a staff writer and wrote primarily news stories. Her junior year she...
